The Ultimate AI for Segmenting Anything, Anywhere


Have you ever wished for an AI that could identify and outline any object in an image? Enter Meta's Segment Anything Model (SAM). It's not just another AI tool.

What is SAM?

SAM is an AI model that can segment any object in an image. But what does that mean? Imagine pointing at an object in a photo. SAM will outline it for you. It's that simple, yet incredibly powerful.

Meta introduced SAM in April 2023, together with SA-1B, a training dataset of more than 1 billion masks on 11 million images. Since then, SAM has taken the AI world by storm. Its versatility, efficiency, and accuracy have made it a favourite among researchers and developers alike.

How Does SAM Work?

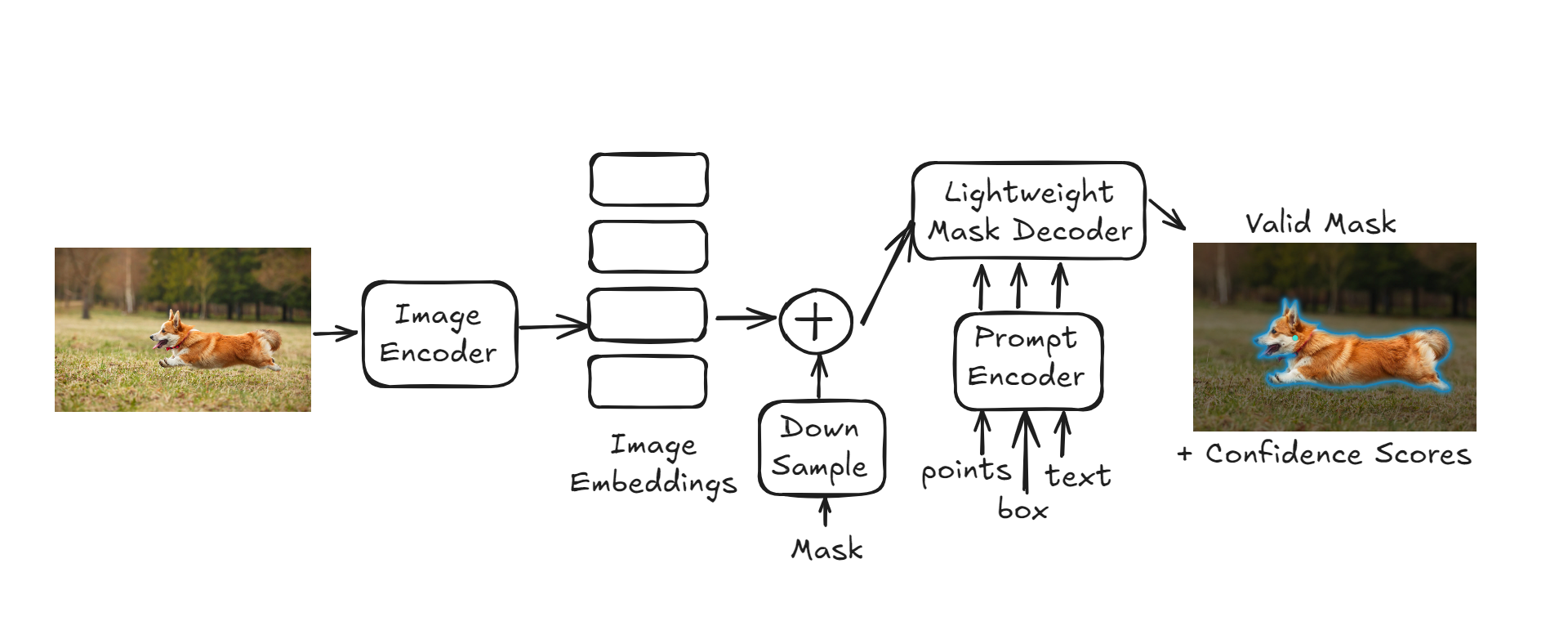

SAM uses a unique approach that combines three key elements:

- A powerful image encoder

- A prompt encoder

- A lightweight mask decoder

Here's the process in action:

- The image encoder analyzes the entire image, creating a detailed representation.

- You provide a prompt, such as a point, a box, or a rough mask.

- The prompt encoder processes your input, translating it into a format SAM can use.

- The mask decoder uses both the image representation and the encoded prompt to create a precise mask of the object.

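The expensive step, image encoding, runs once per image. After that, the prompt encoder and mask decoder are light enough that the SAM paper reports about 50 ms per prompt in a web browser on CPU. That split is what makes SAM feel interactive: each new click returns a mask almost instantly.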
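To make the encode-once, prompt-many split concrete, here is a toy Python sketch. It is not SAM: the hypothetical `ToyPromptablePipeline` class stands in for the three stages, and a simple flood fill plays the role of the mask decoder. What it mirrors is the control flow of an interactive predictor: `set_image` does the expensive work once and caches it, and every `predict` call reuses that cached result.

```python
from collections import deque
from typing import List

Image = List[List[int]]  # grayscale pixel values, indexed as image[row][col]
Mask = List[List[bool]]


class ToyPromptablePipeline:
    """Toy stand-in for SAM's encode-once / prompt-many design; not the real model."""

    def __init__(self) -> None:
        self.encoder_calls = 0
        self._embedding: Image = []

    def set_image(self, image: Image) -> None:
        # Expensive stage (in SAM: the ViT image encoder). Run once per image, then cache.
        self.encoder_calls += 1
        self._embedding = [list(row) for row in image]  # toy "embedding": a copy of the pixels

    def predict(self, x: int, y: int, tolerance: int = 10) -> Mask:
        # Cheap stages (in SAM: prompt encoder + mask decoder). Run once per prompt.
        # Toy decoder: flood fill from the clicked pixel (x = column, y = row) over
        # 4-connected pixels whose value is within `tolerance` of the clicked value.
        if not self._embedding:
            raise RuntimeError("call set_image() before predict()")
        emb = self._embedding
        height, width = len(emb), len(emb[0])
        seed = emb[y][x]
        mask = [[False] * width for _ in range(height)]
        mask[y][x] = True
        queue = deque([(y, x)])
        while queue:
            r, c = queue.popleft()
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if (0 <= nr < height and 0 <= nc < width
                        and not mask[nr][nc]
                        and abs(emb[nr][nc] - seed) <= tolerance):
                    mask[nr][nc] = True
                    queue.append((nr, nc))
        return mask


pipeline = ToyPromptablePipeline()
pipeline.set_image([[0, 0, 200, 200] for _ in range(4)])  # two-tone 4x4 image
left = pipeline.predict(x=0, y=0)   # click on the dark half
right = pipeline.predict(x=3, y=0)  # click on the bright half
print(pipeline.encoder_calls)       # 1: the image was encoded only once
```

Two clicks produce two different masks while `encoder_calls` stays at 1, which is the property that keeps repeated prompts cheap.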

Why is SAM Revolutionary?

SAM isn't just another AI model. It's a leap forward in computer vision technology. Here's why:

- Versatility: SAM can segment anything. From cats to cars, flowers to buildings, it handles diverse objects with ease.

- Zero-shot generalization: Unlike traditional models trained on a fixed set of categories, SAM doesn't need specific training for new objects. It can often segment objects it has never seen before.

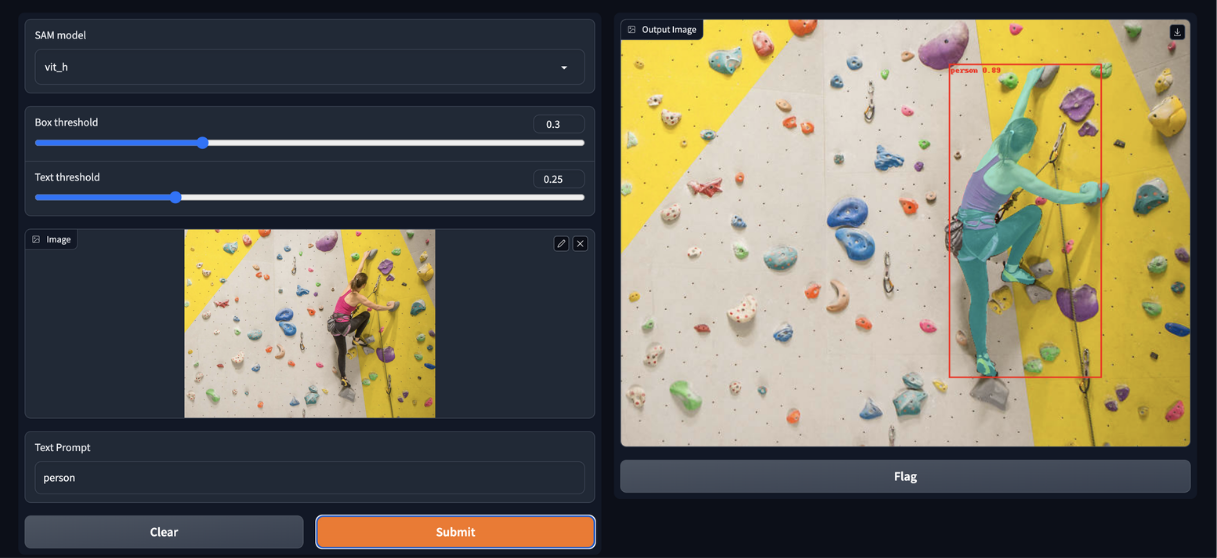

- Interactivity: You guide SAM with simple prompts. Click a point, draw a box, or supply a rough mask, then add more clicks to refine the result. This flexibility makes it user-friendly and adaptable.

- Speed: After the one-time image encoding, each new prompt produces a mask almost instantly, fast enough for interactive applications that need quick responses.

- Accuracy: Its segmentations are usually precise and reliable, even in cluttered scenes.

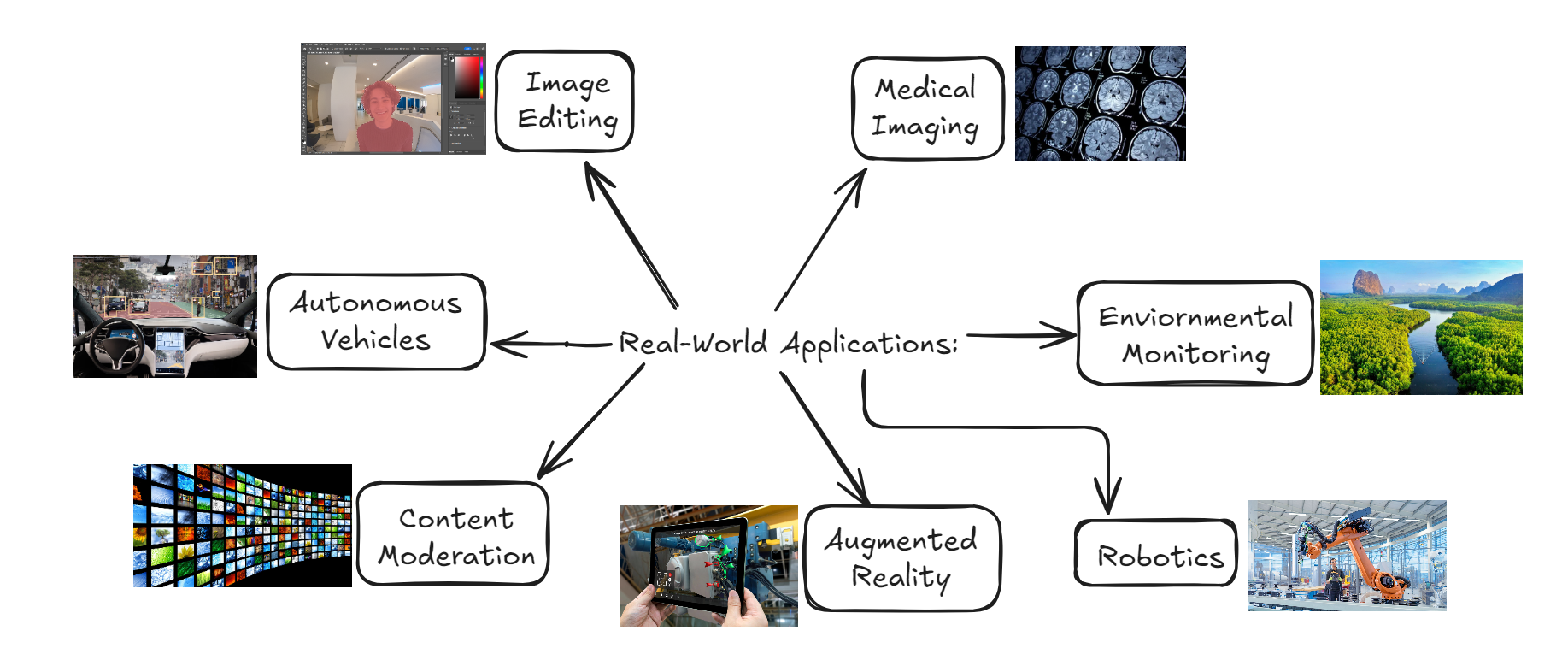

These features open up a world of possibilities. From image editing to autonomous vehicles, medical imaging to augmented reality, SAM's potential applications are vast and diverse.

Technical Deep Dive

Let's take a closer look. SAM's design is a wonder of AI engineering, using several advanced techniques.

The Image Encoder

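SAM uses a Vision Transformer (ViT), pre-trained as a masked autoencoder (MAE), as its image encoder. This encoder:

- Resizes the image so its longer side is 1024 pixels, pads it to 1024×1024, and splits it into 16×16-pixel patches

- Turns each patch into a token and passes the tokens through transformer blocks, where self-attention lets patches exchange information (mostly within local windows, plus a few global-attention blocks)

- Outputs a 64×64 grid of 256-dimensional features, the image embedding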

The result is a rich, detailed representation of the entire image. This approach allows SAM to capture both fine details and broader context, crucial for accurate segmentation.
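The patch arithmetic is easy to check by hand. This minimal sketch (pure Python, with hypothetical helper names `patchify` and `tokens_per_side`) splits an image into non-overlapping square patches and flattens each into one token vector. It only illustrates tokenization; the real encoder then applies learned patch embeddings and attention, which are omitted here.

```python
from typing import List

Image = List[List[float]]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W image into non-overlapping patch x patch tiles, in row-major
    order, and flatten each tile into one vector (a "token")."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


def tokens_per_side(side: int, patch: int) -> int:
    """Number of patches along one side of a square image."""
    return side // patch


# Toy 4x4 image whose pixel values are 0..15, split into 2x2 patches.
toy = [[4 * r + c for c in range(4)] for r in range(4)]
print(patchify(toy, 2)[0])        # [0, 1, 4, 5]: the top-left patch
print(tokens_per_side(1024, 16))  # 64
```

With a 1024×1024 input and 16×16 patches, the transformer works on a 64 × 64 grid, i.e. 4,096 patch tokens.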

The Prompt Encoder

One of SAM's unique features is its ability to handle various types of prompts:

- Points

- Boxes

- Masks

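- Text (explored in the SAM paper as a proof of concept, but not supported by the publicly released model)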

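Each type of prompt has its own encoding. Points and boxes are represented by positional encodings of their coordinates, combined with learned embeddings that mark the prompt type (for example, a foreground vs. background point, or a box's top-left vs. bottom-right corner). Mask prompts are downsampled with convolutions and added to the image embedding. Together, these allow flexible and intuitive interactions.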
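For point prompts, SAM encodes the click location with random Fourier features (a fixed random projection of the normalized coordinates, followed by sine and cosine) and adds an embedding that tags the point as foreground or background. The sketch below follows that general scheme in pure Python. The function names, the 4-feature size, and the fixed `FOREGROUND`/`BACKGROUND` vectors are illustrative assumptions; in SAM the label embeddings are learned and the feature size is much larger.

```python
import math
import random
from typing import List, Tuple


def make_gaussian_matrix(num_feats: int, scale: float = 1.0,
                         seed: int = 0) -> List[Tuple[float, float]]:
    """Fixed random projection directions (sampled once, never trained)."""
    rng = random.Random(seed)
    return [(rng.gauss(0.0, scale), rng.gauss(0.0, scale)) for _ in range(num_feats)]


def encode_point(x: float, y: float, width: int, height: int,
                 gaussian: List[Tuple[float, float]],
                 label_embedding: List[float]) -> List[float]:
    """Random-Fourier-feature encoding of one click plus a point-type embedding."""
    # 1) Normalise pixel coordinates to [0, 1], then shift to [-1, 1].
    u = 2.0 * (x / width) - 1.0
    v = 2.0 * (y / height) - 1.0
    # 2) Project onto each random direction and scale by 2*pi.
    proj = [2.0 * math.pi * (u * gx + v * gy) for gx, gy in gaussian]
    # 3) Sine and cosine features give a vector of length 2 * num_feats.
    feats = [math.sin(p) for p in proj] + [math.cos(p) for p in proj]
    # 4) Add the embedding that tags the point type (hypothetical fixed values here).
    if len(label_embedding) != len(feats):
        raise ValueError("label_embedding must have length 2 * num_feats")
    return [f + e for f, e in zip(feats, label_embedding)]


gaussian = make_gaussian_matrix(num_feats=4)
FOREGROUND = [0.0] * 8  # hypothetical stand-ins for SAM's learned label embeddings
BACKGROUND = [0.5] * 8
print(encode_point(300, 700, 1024, 1024, gaussian, FOREGROUND))
print(encode_point(300, 700, 1024, 1024, gaussian, BACKGROUND))
```

The same click yields the same positional features either way; only the added label embedding tells the decoder whether to include or exclude that location. A click at the image centre maps to u = v = 0, so every sine feature is 0 and every cosine feature is 1.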

The Mask Decoder

This is where the magic happens. The decoder:

- Takes the image representation

- Combines it with the prompt encoding

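- Predicts per-pixel mask scores, which are thresholded into a binary mask

It uses two lightweight transformer decoder blocks in which the prompt tokens and the image embedding attend to each other in both directions, then upsamples the result to produce the mask. Because a single click can be ambiguous (the shirt, or the person wearing it?), the decoder can return three candidate masks, each with a predicted IoU score that estimates its quality. This lets SAM produce accurate segmentations even when the prompt alone does not pin down the object.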
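The final step can be sketched in a few lines. In SAM's reference code, mask logits are binarized at a threshold of 0.0, and when several candidates are returned, a common choice is to keep the one with the highest predicted IoU. This pure-Python version uses hypothetical helper names and tiny hand-made 2×2 logit grids; it shows the selection logic only, not how the decoder computes the logits.

```python
from typing import List, Sequence, Tuple

Logits = List[List[float]]
Mask = List[List[bool]]


def logits_to_mask(logits: Logits, threshold: float = 0.0) -> Mask:
    """Binarize per-pixel logits: a pixel is in the mask when its logit exceeds the threshold."""
    return [[value > threshold for value in row] for row in logits]


def select_best_mask(candidates: Sequence[Logits],
                     iou_scores: Sequence[float]) -> Tuple[Mask, float]:
    """Return the candidate with the highest predicted IoU, as a binary mask, plus its score."""
    if not candidates or len(candidates) != len(iou_scores):
        raise ValueError("need exactly one IoU score per candidate mask")
    best = max(range(len(candidates)), key=lambda i: iou_scores[i])
    return logits_to_mask(candidates[best]), iou_scores[best]


# Three hypothetical 2x2 candidates (think subpart, part, whole object) with made-up scores.
candidates = [
    [[-1.0, 2.0], [-3.0, -0.5]],
    [[1.0, 2.0], [-1.0, 0.5]],
    [[1.0, 1.0], [1.0, 1.0]],
]
mask, score = select_best_mask(candidates, [0.70, 0.93, 0.81])
print(mask, score)  # [[True, True], [False, True]] 0.93
```

Picking by predicted IoU is only one policy; an interactive tool might instead show all three candidates and let the user choose.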

SAM in Action: Real-World Applications

SAM isn't just a lab experiment. It's making waves in various fields:

- Image Editing: Photoshop professionals can use SAM for precise object selection, streamlining their workflow.

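- Medical Imaging: SAM can help outline tumours or anomalies in scans, potentially speeding up diagnostic workflows, though its out-of-the-box accuracy on medical images is often lower than on everyday photos and usually improves with fine-tuning.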

- Autonomous Vehicles: By detecting and segmenting road elements, SAM can enhance the perception systems of self-driving cars.

- Augmented Reality: SAM's fast, promptable segmentation could help blend virtual objects seamlessly with the real world.

- Robotics: SAM can help robots identify and interact with objects in their environment, improving their ability to navigate and manipulate the world around them.

- Content Moderation: SAM does not judge what it segments, but social media platforms could pair it with a separate classifier, using SAM to isolate image regions that the classifier then checks and flags.

- Environmental Monitoring: SAM can assist in analyzing satellite imagery to track deforestation, urban development, or changes in wildlife habitats.

The possibilities are endless. As developers integrate SAM into their workflows, we'll likely see even more innovative uses emerge.

Challenges and Limitations

Despite its power, SAM isn't perfect. It faces some challenges:

- Computational Demand: The image encoder is expensive to run, especially without a GPU, which can be a limitation for mobile or edge applications.

- Edge Cases: Highly complex or unusual scenes can sometimes trip up the model.

- Ambiguity: In some cases, what constitutes an "object" isn't clear, leading to potential inconsistencies in segmentation.

- Fine Details: While generally accurate, SAM might miss extremely fine details in some instances.

- Context Understanding: SAM excels at segmentation but doesn't inherently understand the context or meaning of the objects it segments.
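To put the computational demand in numbers, the SAM paper reports roughly 632 million parameters for the ViT-H image encoder. The hypothetical helper below estimates the memory needed just to hold those weights; activations, cached embeddings, and framework overhead come on top.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 10**9 bytes).

    Activations, cached image embeddings and framework overhead are not included.
    """
    return num_params * bytes_per_param / 1e9


VIT_H_ENCODER_PARAMS = 632_000_000  # approximate figure reported in the SAM paper

print(round(weight_memory_gb(VIT_H_ENCODER_PARAMS, 4), 3))  # 32-bit floats: 2.528
print(round(weight_memory_gb(VIT_H_ENCODER_PARAMS, 2), 3))  # 16-bit floats: 1.264
```

Halving the precision halves the weight memory, which is one reason lower-precision and smaller encoder variants matter for running SAM on modest hardware.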

Researchers are actively working on these issues. Each update brings improvements and refinements, pushing the boundaries of what's possible in computer vision.

The Future of SAM

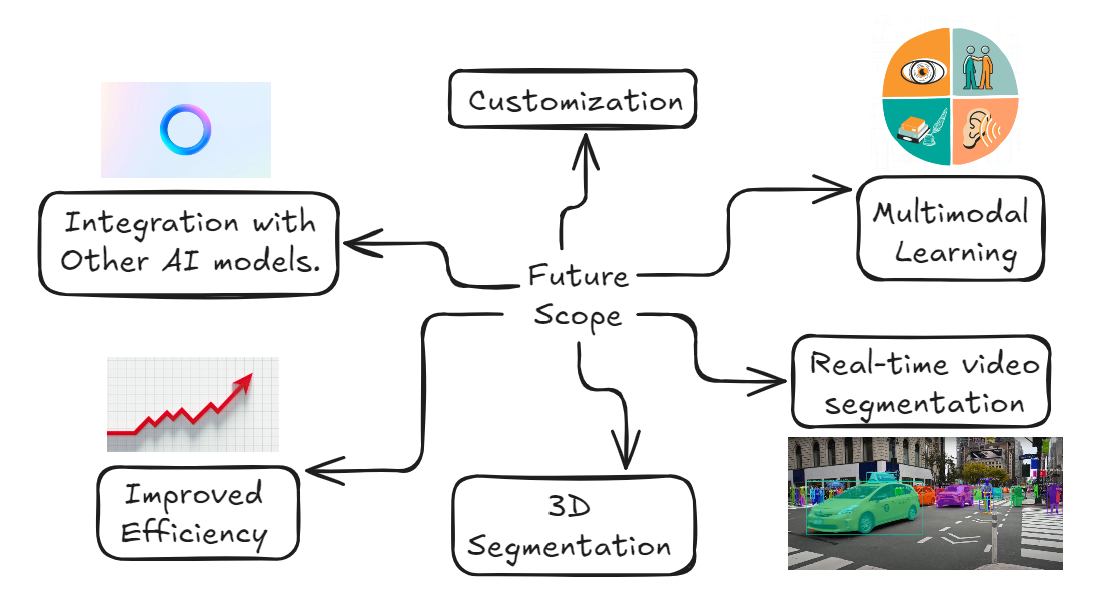

What's next for SAM?

- Integration with Other AI Models: Combining SAM with language models could lead to more intuitive, conversation-like interactions for image segmentation.

- Improved Efficiency: Researchers are working on making SAM faster and less resource-intensive, potentially allowing it to run on mobile devices.

- 3D Segmentation: Extending SAM's capabilities to three-dimensional data could revolutionize fields like medical imaging and 3D modelling.

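- Video Segmentation: SAM 2, released by Meta in 2024, already extends promptable segmentation to video by tracking a selected object across frames. Further progress here could enhance video editing, surveillance, and motion capture technologies.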

- Customization: Allowing fine-tuning for specific domains or tasks could make SAM even more versatile and accurate in specialized applications.

- Multi-modal Learning: Incorporating other types of data, like audio or sensor readings, could lead to more robust and context-aware segmentation.

Getting Started with SAM

Ready to try SAM? Here's how you can get started:

- Official Repository: Check out Meta's facebookresearch/segment-anything repository on GitHub for code and documentation. This is your starting point for understanding SAM's architecture and implementation.

- Pre-trained Models: Download one of the released checkpoints, which use ViT-H, ViT-L, or ViT-B image encoders (from largest to smallest). These are great for getting up and running quickly or as a starting point for transfer learning.

- API Integration: SAM is also available through other libraries and platforms (for example, Hugging Face Transformers includes a SAM implementation), making it easier to incorporate into existing projects.

- Custom Training: For advanced users, fine-tuning SAM for specific tasks can yield even better results in specialized domains.

- Community Resources: Join forums and community groups to share experiences, get help, and stay updated on the latest developments.
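As a starting point, here is a sketch of point-prompted segmentation with the official `segment_anything` Python package from the facebookresearch/segment-anything repository. It assumes the package and NumPy are installed and that you have downloaded a checkpoint matching `model_type`; the checkpoint path is a placeholder you supply. `sam_model_registry`, `SamPredictor`, `set_image`, and `predict` are the package's public API; the wrappers `build_point_prompt` and `segment_with_clicks` are hypothetical helpers written for this example.

```python
from typing import List, Tuple


def build_point_prompt(
    points: List[Tuple[float, float, bool]],
) -> Tuple[List[List[float]], List[int]]:
    """Split (x, y, is_foreground) clicks into the coordinate and label lists SAM expects.
    Label 1 marks a foreground point (include), 0 a background point (exclude)."""
    coords = [[float(x), float(y)] for x, y, _ in points]
    labels = [1 if is_foreground else 0 for _, _, is_foreground in points]
    return coords, labels


def segment_with_clicks(image_rgb, points, checkpoint_path: str, model_type: str = "vit_h"):
    """Segment the object indicated by `points` in an H x W x 3 uint8 RGB NumPy array.

    Returns the highest-scoring mask (H x W boolean array) and its predicted IoU.
    """
    # Imported lazily so this file can be loaded without SAM or NumPy installed.
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)  # "vit_h", "vit_l" or "vit_b"
    predictor = SamPredictor(sam)
    predictor.set_image(image_rgb)  # runs the heavy image encoder once
    coords, labels = build_point_prompt(points)
    masks, scores, _low_res_logits = predictor.predict(
        point_coords=np.array(coords),
        point_labels=np.array(labels),
        multimask_output=True,  # ask for three candidates to cover ambiguous clicks
    )
    best = int(np.argmax(scores))
    return masks[best], float(scores[best])
```

A call such as `segment_with_clicks(image, [(500, 375, True)], "path/to/checkpoint.pth")` would segment the object under that click. Because `set_image` is the slow step, an interactive app would keep one `SamPredictor` per image and call `predict` for each new click. For prompt-free, whole-image segmentation, the same package also provides `SamAutomaticMaskGenerator`.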

Powering SAM with Decentralized Computing

Running advanced AI models like SAM often requires substantial computational resources. This is where decentralized computing solutions come into play. Platforms like the Spheron Network offer decentralized computing options that are well suited to AI workloads like SAM.

Spheron provides scalable infrastructure that can grow with your needs, whether you're experimenting with SAM or deploying it at scale. Their cost-efficient compute options make it feasible to run resource-intensive models without breaking the bank. Plus, Spheron's simplified infrastructure approach means you can focus on your AI projects rather than managing complex backend systems.

Conclusion

Meta's Segment Anything Model is more than just an AI tool. It's a glimpse into the future of computer vision. From professional applications to everyday use, SAM is changing how we interact with and understand images.

Try it out:

- SAM 2 demo: https://sam2.metademolab.com/demo

- SAM demo: https://segment-anything.com/demo

So, what will you create with SAM?